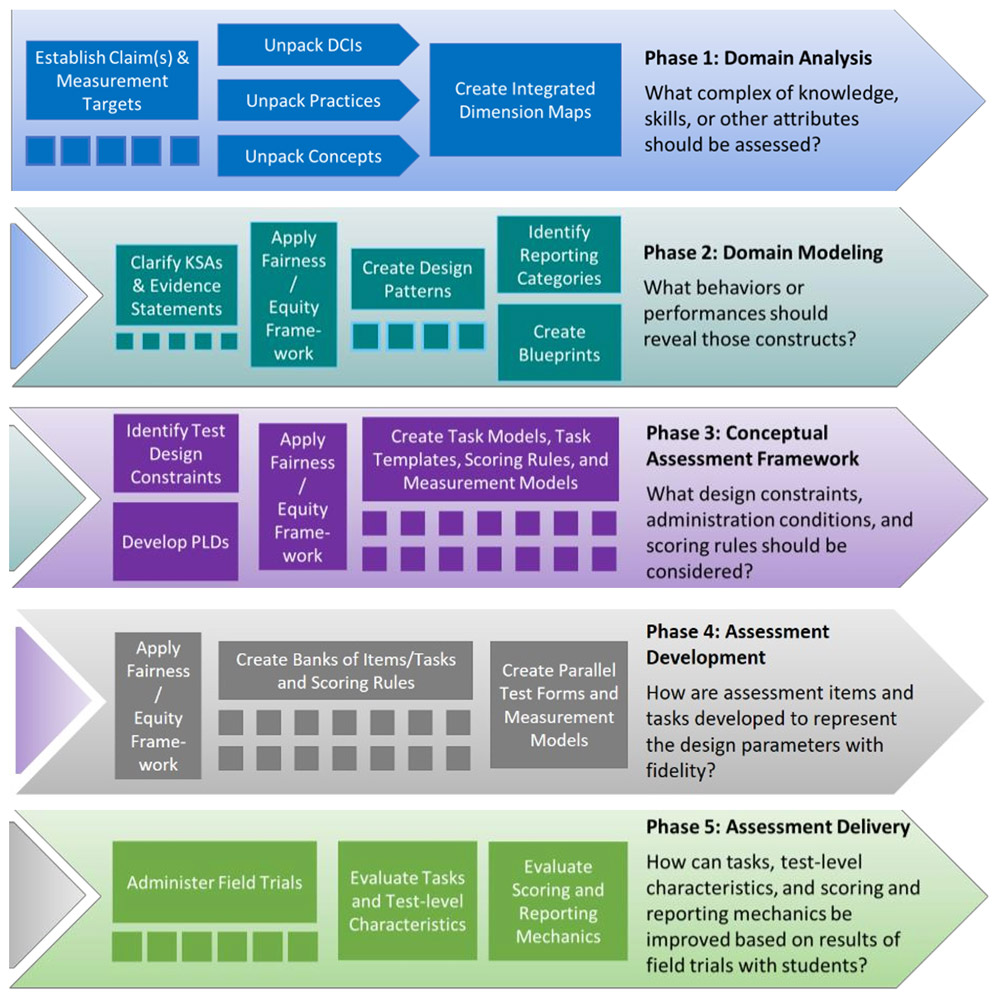

A Five-Phase Principled Assessment Design Approach

The SIPS Assessments project uses a five-phase principled assessment design approach to design assessments that focus on the inferences stakeholders wish to make based on test scores. If assessment information (e.g., sub-scores and total scores) is expected to have value and usefulness for educators, then, early in the design phase, assessment developers must have a clear sense of how the test scores will be used to support inferences about student achievement and to inform science teaching and learning in schools. Our disciplined approach to designing and constructing assessments draws from evidence-centered design (ECD; Mislevy & Haertel, 2006), which has gained widespread attention as a comprehensive approach for principled assessment design and validation. ECD emphasizes the evidentiary base for specifying coherent, logical relationships among the (a) learning goals that comprise the constructs to be measured (i.e., the claims articulating what students know and can do); (b) evidence in the form of observations, behaviors, or performances that should reveal the target constructs; and (c) features of tasks or situations that should elicit those behaviors or performances.

SIPS partners will use this iterative five-phase principled assessment design process to design assessments that align closely to the three-dimensional Next Generation Science Standards (NGSS), which are derived from the NRC Framework for K-12 Science Education.

In the first phase, Domain Analysis, we set out to articulate what information is important in a particular domain of science (e.g., biology, physics) and to systematically unpack NGSS performance expectations and synthesize the unpacking into multiple components called learning performances. The term learning performance draws from the work of Perkins (1998) and his notion of understanding performances as opportunities for students to showcase understanding in thought-demanding ways. The term has been used more recently in curriculum and assessment design (e.g., DeBarger, Penuel, Harris, & Kennedy, 2015; Krajcik, McNeill, & Reiser, 2008). In our work, learning performances constitute knowledge-in-use statements that incorporate aspects of disciplinary core ideas, science practices, and crosscutting concepts that students need to be able to integrate as they progress toward achieving performance expectations. A single learning performance is crafted as a knowledge-in-use statement that is smaller in scope than a performance expectation and only partially represents it. Each learning performance describes an essential part of a performance expectation that students would need to achieve at some point during instruction to ensure that they are progressing toward achieving the more comprehensive performance expectation. Taken together, the learning performances for a performance expectation describe the proficiencies that students need to demonstrate in order to meet it.

Adapted with permission from the SCILLSS project (SCILLSS, 2017c).

The key aspects of the domain, once defined in the first phase, are then organized and structured in the second phase, Domain Modeling. This phase involves the principled application of design patterns (i.e., formal representations that address the recurring design problems in a particular domain of science). In assessment, a design pattern is used as a schema or structure for conceptualizing the components of the assessment argument. It is structured around three elements: the focal knowledge, skills, and abilities (fKSAs) to be assessed; the behaviors that provide evidence of the attainment of those fKSAs; and the features of the assessment items and tasks that elicit those behaviors.
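To make the three-part structure of a design pattern concrete, it can be written down as a simple record. The sketch below is illustrative only: the class name `DesignPattern`, its field names, and the `missing_components` helper are assumptions introduced here, not part of any SIPS or ECD tooling, and the bracketed entries are placeholders rather than real assessment content.

```python
from dataclasses import dataclass


@dataclass
class DesignPattern:
    """Hypothetical record for the three elements of the assessment argument."""

    title: str
    focal_ksas: list[str]      # fKSAs the items and tasks are meant to assess
    evidence: list[str]        # behaviors that provide evidence of those fKSAs
    task_features: list[str]   # item/task features that elicit those behaviors

    def missing_components(self) -> list[str]:
        """Return the names of any argument elements that are still empty."""
        parts = {
            "focal_ksas": self.focal_ksas,
            "evidence": self.evidence,
            "task_features": self.task_features,
        }
        return [name for name, values in parts.items() if not values]


# Placeholder content only; a real pattern would carry domain-specific text.
pattern = DesignPattern(
    title="<design pattern title>",
    focal_ksas=["<fKSA statement>"],
    evidence=["<observable behavior>"],
    task_features=[],
)
print(pattern.missing_components())  # ['task_features']
```

Keeping the three elements side by side in one record makes it easy to see when part of the assessment argument has not yet been specified.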

The third phase, Conceptual Assessment Framework, builds on the Domain Modeling phase by continuing to organize and structure the domain content in terms of the assessment argument, while moving toward the mechanical details required to develop and implement an operational assessment, such as the design constraints, administration conditions, and scoring rules.

In the fourth phase, Assessment Development, task template specifications are used to develop tasks and rubrics. The candidate tasks are then organized into parallel test forms, and test administration instructions are specified.
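One simple way to picture the step of organizing candidate tasks into parallel forms is sketched below. It assumes, purely for illustration, that each task is tagged with the learning performance it targets and that tasks are dealt round-robin within each learning performance so every form covers the same content. The function name, the `(task_id, learning_performance)` input format, and the task labels are hypothetical; the sketch balances content coverage only, and operational form assembly would typically also weigh statistical properties of the tasks.

```python
from collections import defaultdict


def assemble_parallel_forms(tasks, n_forms):
    """Deal tasks into n_forms forms, round-robin within each learning performance.

    tasks: list of (task_id, learning_performance) pairs (hypothetical format).
    Returns a list of n_forms lists of task ids.
    """
    # Group task ids by the learning performance they target.
    by_lp = defaultdict(list)
    for task_id, lp in tasks:
        by_lp[lp].append(task_id)

    # Within each learning performance, alternate tasks across the forms.
    forms = [[] for _ in range(n_forms)]
    for lp in sorted(by_lp):
        for i, task_id in enumerate(by_lp[lp]):
            forms[i % n_forms].append(task_id)
    return forms


# Hypothetical task pool: two tasks for each of two learning performances.
pool = [("T1", "LP1"), ("T2", "LP1"), ("T3", "LP2"), ("T4", "LP2")]
print(assemble_parallel_forms(pool, 2))  # [['T1', 'T3'], ['T2', 'T4']]
```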

In the fifth phase, Assessment Delivery, field trials are conducted to empirically evaluate how students interact with the tasks, to examine test-level characteristics, and to evaluate the scoring and reporting mechanics.

References

Almond, R. G., Steinberg, L. S., & Mislevy, R. J. (2002). Enhancing the design and delivery of assessment systems: A four-process architecture. Journal of Technology, Learning, and Assessment, 1(5). Retrieved from http://www.jtla.org

DeBarger, A. H., Penuel, W. R., Harris, C. J., & Kennedy, C. A. (2015). Building an assessment argument to design and use next generation science assessments in efficacy studies of curriculum interventions. American Journal of Evaluation, 37(2). DOI: 10.1177/1098214015581707

Krajcik, J., McNeill, K. L., & Reiser, B. J. (2008). Learning-goals-driven design model: Developing curriculum materials that align with national standards and incorporate project-based pedagogy. Science Education, 92(1), 1-32. DOI: 10.1002/sce.20240

Mislevy, R. J., & Haertel, G. D. (2006). Implications of evidence-centered design for educational testing. Educational Measurement: Issues and Practice, 25(4), 6-20.

National Research Council (NRC). (2014). Developing Assessments for the Next Generation Science Standards. Washington, DC: The National Academies Press. DOI: 10.17226/18409

Perkins, D. (1998). Do students understand understanding? The Education Digest, 59(5).

SCILLSS. (2017c). SCILLSS Resources. Strengthening Claims-based Interpretations and Uses of Local and Large-scale Science Assessment Scores. https://www.scillsspartners.org/scillss-resources/