Project Information

The SIPS Assessments project rests on an assessment design philosophy that is grounded in the Assessment Triangle (Pellegrino, Chudowsky, & Glaser, 2001) and the necessary coherence among its three elements: cognition, observation, and interpretation (Nichols, Kobrin, Lai, & Koepfler, 2017). We (a) carefully define the expectations for learning in relation to standards as well as to research on how students gain competency toward those standards and (b) design observations (assessment tasks) that can elicit information about students’ learning status and progress so that (c) this information can be interpreted and used to support and to evaluate student learning. Further, the SIPS Assessments project applies a common principled assessment design (PAD) approach to how we articulate expectations and design observations. This approach begins with a deep analysis of the target domain for assessment and progresses through several steps that culminate in assessment delivery.

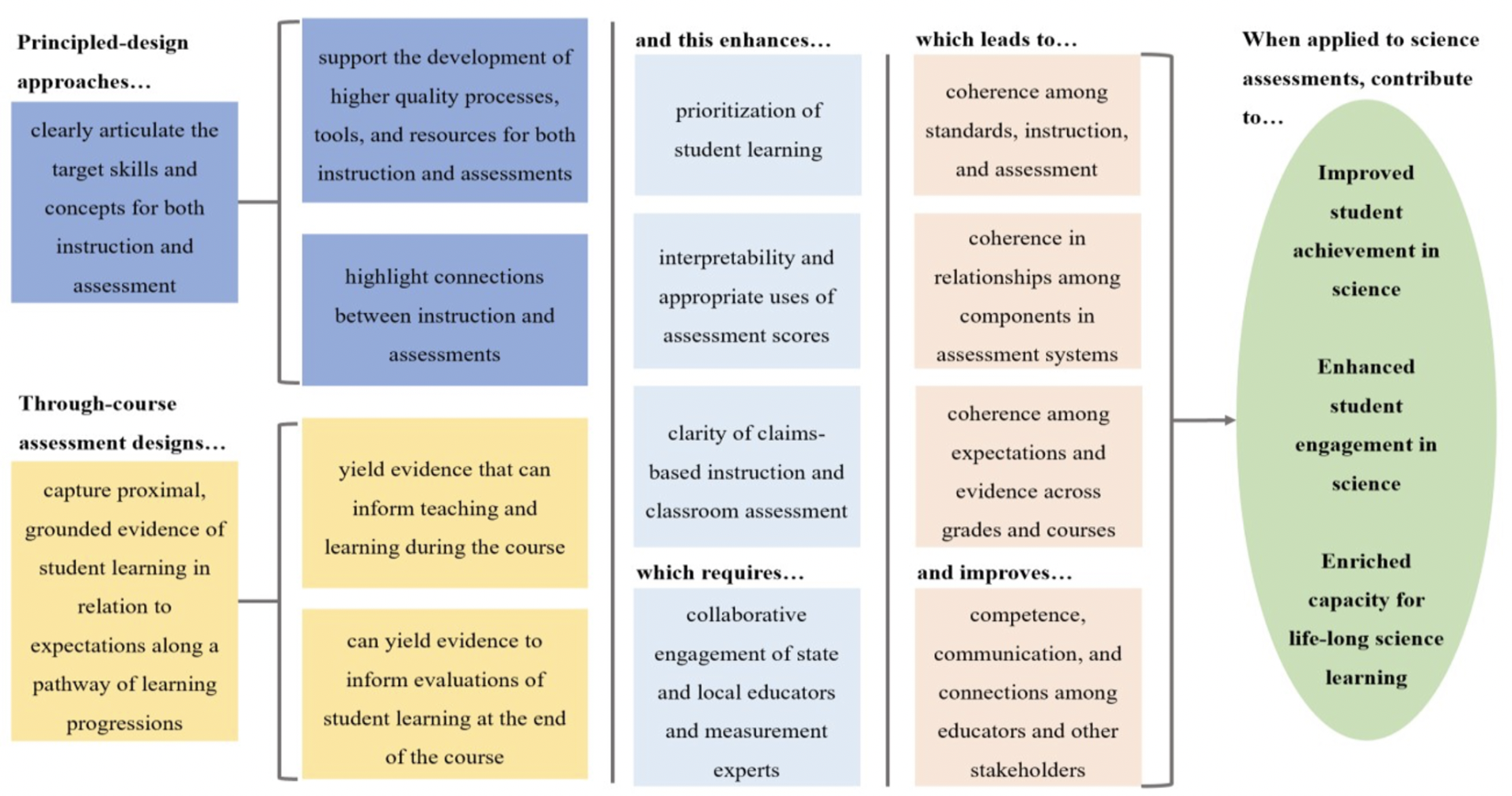

The Theory of Action that underlies our approach draws from two projects, SCILLSS and NGSA, and from the broader research and practice literature. We believe in a coherent model of research-to-practice with rigorous evidence to support design and evaluation decisions.

The Theory of Action for SIPS

The SCILLSS project was funded for a 48-month period from 2017 through 2020 by an Enhanced Assessment Grant from the Office of Elementary and Secondary Education at the US Department of Education, awarded to the Nebraska Department of Education. The primary goals of SCILLSS were to strengthen the knowledge base among stakeholders for using principled assessment design (PAD) to create and evaluate quality science assessments that generate meaningful and useful scores, and to establish a means for states to strengthen the meaning of statewide assessment results and to connect those results with local assessments in a complementary system.

The NGSA project is funded by grants from the National Science Foundation, the Gordon and Betty Moore Foundation, and the Chan Zuckerberg Initiative. The NGSA collaborative team is composed of experts in science and engineering education, K-12 assessment, technology-supported learning, and curriculum and instruction. NGSA’s purpose is to implement a PAD approach to develop technology-delivered, classroom-ready assessment tasks, rubrics, and accompanying resources that help science teachers gain insights into their students’ proficiency with the Performance Expectations of the NGSS.

The SIPS project objectives are to:

- Apply the SCILLSS and NGSA principled design approach to create tasks that are administered across the school year (rather than at the end);

- Embed the tasks within Understanding by Design (UbD; Wiggins & McTighe, 1998) curriculum maps to establish a clear link between instruction and assessment;

- Be portable across a range of learning locations by engaging students in an interactive assessment process with rich phenomena and a performance-based design; and

- Expand benefits of SCILLSS and NGSA to several new states and their educator networks.

Project Design

To address project objectives, SIPS is organized into six phases that contribute to the development of state-specific and generalizable deliverables. Phase timelines overlap significantly across the 36-month project period. Collaboration is a key aspect of each SIPS project phase. This includes interstate collaboration; collaboration among state and local educators; collaboration among measurement practitioners and front-line educators to target all aspects of assessment implementation; and state collaboration with independent technical support providers.

Phase 1

Includes project management activities to ensure that SIPS is managed appropriately, along with a Theory of Action that lays out a framework that can be tailored to specific contexts.

Phase 2

Includes the development of claims, measurement targets, and performance level descriptors, as well as year-long curriculum planning tools and templates, to inform curriculum and assessment (item and test) development procedures and to determine what the assessment scores are meant to reflect.

Phase 3

Involves the creation of prototype Understanding by Design (UbD) curriculum units and common assessments at grades 5 and 8 that will focus on the knowledge, skills, and abilities expected of students after each quarter of instruction as specified by the learning and curriculum framework.

Phase 4

Focuses on classroom assessment development workshops to build educator capacity to develop formative science assessment tasks.

Phase 5

Involves piloting the prototype UbD curriculum units and common assessments at grades 5 and 8 to gauge their quality and usability and to inform revisions to the units and assessments.

Phase 6

Involves project evaluation and reporting to evaluate progress, guide next steps, and provide useful reports, as well as the development of SIPS measurement models, dissemination plans, and specifications for a web-based delivery platform.

References

National Research Council (NRC). (2014). Developing Assessments for the Next Generation Science Standards. Washington, DC: The National Academies Press. https://doi.org/10.17226/18409.

National Research Council (NRC). (2012). A Framework for K-12 Science Education: Practices, Crosscutting Concepts, and Core Ideas. Washington, DC: The National Academies Press. https://doi.org/10.17226/13165.

Nichols, P. D., Kobrin, J. L., Lai, E., & Koepfler, J. D. (2017). The role of theories of learning and cognition in assessment design and development. In A. A. Rupp & J. P. Leighton (Eds.), The Handbook of Cognition and Assessment: Frameworks, Methodologies, and Applications, First Edition, pp. 41-74. New York: Wiley Blackwell.

Pellegrino, J. W., Chudowsky, N., & Glaser, R. (2001). Knowing what students know: The science and design of educational assessment. Washington, DC: National Academy Press.